Along with a discussion of personal encyclopedias.

There has been a small, barely discernible flurry of activity lately around the idea of personal knowledge bases, in the same vicinity as the personal wikis that I like to read. (I’ve been a fan of personal encyclopedias since discovering Samuel Johnson and, particularly, Thomas Browne as a child, and I am always searching for the modern-day homes of these kinds of individuals.)

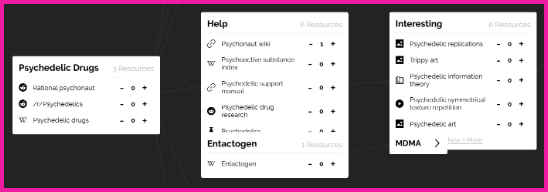

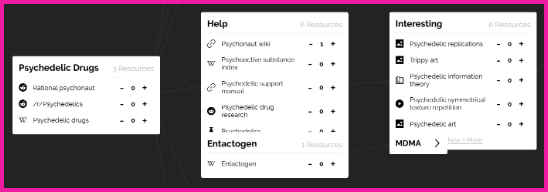

Nikita’s wiki is the most established of those I’ve seen so far, enhanced by its proximity to Nikita’s Learn Anything, which appears to be a kind of ‘awesome directory’ laid out as a hierarchical map.

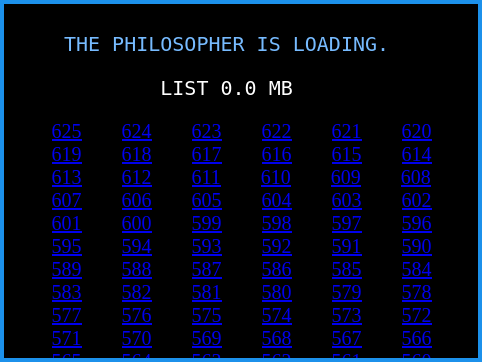

Another project that came up was Ceasar Bautista’s Encyclopedia, which I installed to get a feel for it. You add text files to it and it generates nice pages for them. However, it requires a fair amount of supporting software, so most people are probably better served by TiddlyWiki. This encyclopedia’s main page is a simple search box, which would be a novel way to configure a TiddlyWiki.

I view these kinds of personal directories as the connective tissue of the Web. They are pure linkage, connecting the valuable parts. And because they curate and edit this material, they are valuable and generous works in their own right. To be an industrious librarian, journalist or archivist is to enrich the species: to credit one’s sources and simply to pay attention to others.

I will also point you to the Meta Knowledge repo, which lists a number of similar sites out there. I am left wondering: where does this crowd congregate? Who can introduce me to them?

Reply: Strategy: Minimize

Took a look at your blog, and it’s sweet! I will be sure to include it in my next href hunt. I enjoyed the article about Dat and am interested in finding others who write about practical uses of the ‘dweb’. Unfortunately, many of the links on Tara Vancil’s directory are ‘broken’ (perhaps ‘vanished’ is more accurate?) and I’m not sure how to discover more.

At any rate, good to meet you!